31:

Here’s that time of the year when you make those resolutions you’re never gonna keep. I’ll make mine and I’ll try to stick to it, hopefully. Well, what is it? Very simple: I want to practice live coding in SuperCollider more, and more regularly, AND record audio sessions, and post them here in a section called “L!vE K@ding”. I’m not sure I will be posting the code, though, ’cause when I live code I get very messy. But I’ll be happy to discuss techniques, what I do and what I don’t, etc. Let me add that I’m not an expert at all, and there are some great people out there doing excellent things. But you know what? If you never start, you’ll never learn. The session is not perfect? Who cares, that’s how “live” is. 😉

So, watch this space, if you are interested.

Meanwhile, Happy New Coding Year!

20:

In this post I want to talk about musical phrasings in algorithmic composition and algebra over finite fields. Yep, algebra, so brace yourself :-).

I am pretty sure you have been exposed to clock arithmetic: basically, you consider integer numbers modulo a natural number p. Now, when p is a prime, the commutative ring $\mathbb{Z}/p\mathbb{Z}$ is actually a field with finitely many elements. There is a name for that: finite field, or Galois* field, usually written $\mathbb{F}_p$.

Now consider a p×p matrix A with entries in $\mathbb{F}_p$: A will give us a map $\phi_A$ from $\mathbb{F}_p$ to itself via

$$\phi_A(i) = \sum_{j=0}^{p-1} a_{ij}\, j \mod p,$$

where $a_{ij}$ are the entries of A. Now, consider a function f from $\mathbb{F}_p$ to a finite set S, and consider the subgroup P of the group of permutations of p objects given by the elements $\sigma$ satisfying

$$f(\sigma(i)) = f(i) \quad \text{for all } i \in \mathbb{F}_p.$$

P always contains the identity; if the function f is not injective, it contains nontrivial permutations as well. Notice that for any function f of the type above, the matrix A gives us another function $f_A$ defined as**

$$f_A(i) = f(\phi_A(i)).$$

Now we can ask ourselves: given a function f, can we find a matrix A such that

$$f_{A^k} = f$$

for some natural number k? What this means is that we want a matrix which, applied k times to the function f, returns the function itself. This holds, for instance, whenever $\phi_{A^k}$ is one of the permutations in P.

In the case in which the function f is injective, P contains only the identity, so we need $\phi_{A^k}$ to be the identity map, which is guaranteed when $A^k = I$: the problem becomes that of finding matrices of finite order over a finite field. The fantastic thing is that they are known to exist***, and even better their number is known in many situations!
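To make the finite-order condition a little more concrete, here is a small brute-force sketch in Python (my own illustration, not code from the original post; the helper names `mat_mul`, `identity` and `matrix_order` are hypothetical). It looks for the smallest k with $A^k = I$ over $\mathbb{F}_p$, giving up after `max_k` steps. The last example is idempotent ($A^2 = A$) and singular, so its powers never reach the identity.

```python
# Brute-force search for the order of a square matrix over F_p (p prime).
# Helper names are illustrative, not from the original post.

def mat_mul(a, b, p):
    """Product of two square matrices, with entries reduced mod p."""
    n = len(a)
    return [[sum(a[i][t] * b[t][j] for t in range(n)) % p for j in range(n)]
            for i in range(n)]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matrix_order(a, p, max_k=10_000):
    """Smallest k >= 1 with A^k == I (mod p), or None if none is found up to max_k."""
    n = len(a)
    ident = identity(n)
    power = [[x % p for x in row] for row in a]  # A^1
    for k in range(1, max_k + 1):
        if power == ident:
            return k
        power = mat_mul(power, a, p)  # A^(k+1)
    return None


swap = [[0, 1], [1, 0]]        # a permutation matrix
shear = [[1, 1], [0, 1]]       # A^k = [[1, k], [0, 1]], so A^7 = I over F_7
projector = [[1, 0], [0, 0]]   # idempotent and singular: never reaches I
print(matrix_order(swap, 7), matrix_order(shear, 7), matrix_order(projector, 7, max_k=100))
# 2 7 None
```

Brute force is fine for the tiny sizes used here (p = 7); it is not meant as an efficient way to count such matrices.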

“Ok, now, what all of this has to do with musical phrasing?! No, seriously, I’m getting annoyed, what really? ”

I hear your concern, but as with almost everything in life, it’s just a matter of perspective. So, consider the set S as a set of pairs (pitch, duration) for a note. A function f as above will tell us in which order we play the notes and what the duration of each note is: in other words, it is a musical phrasing. By picking a matrix A, we can generate from f a new phrasing, given by the function $f_A$, and we can reiterate the process. If A satisfies the condition above, after a finite number of steps the phrasing will repeat. Hence, the following SuperCollider code

s.boot;

SynthDef(\mall,{arg out=0,note, amp = 1;

var sig=Array.fill(3,{|n| SinOsc.ar(note.midicps*(n+1),0,0.3)}).sum;

var env=EnvGen.kr(Env.perc(0.01,1.2), doneAction:2);

Out.ar(out, sig*env*amp!2);

}).add;

(

var matrix, index, ind, notes, times, n, a;

notes = [48, 53, 52, 57, 53, 59, 60] + 12;

times = [1/2, 1/2, 1, 1/2, 1/2, 1, 1]*0.5;

n = 7;

matrix = Array.fill(n,{Array.fill(n, {rrand(0, n-1);})});

index = (0..(n-1));

a = Prout({

inf.do({

ind = [];

matrix.collect({|row|

ind = ind ++ [(row * index).sum % n];

((row * index).sum % n).yield;

});

index = ind;

});

});

Pbind(*[\instrument: \mall, \index: a, \note: Pfunc({|ev| notes[ev[\index]];}), \dur:Pfunc({|ev|

times[ev[\index]];})]).trace.play;

)

s.quit;

represents a “sonification” of the probability distribution of finding a matrix A with the properties above, for p=7. We are assuming that the various matrices have equal probability to be generated in the code above.
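If you want to check, outside of the server, when the phrasing produced by a given matrix starts repeating, here is a Python sketch of the same index update the Prout performs (the function names are mine, and `random.Random` stands in for `rrand`). Since there are at most $7^7$ possible index vectors, the sequence is always eventually periodic, but with a random, possibly singular, matrix it may never come back to the original phrasing: `first_return` returns `None` in that case.

```python
import random

# Same update as the Prout: new_index[i] = sum_j matrix[i][j] * index[j]  (mod n).
# Function names are illustrative, not from the original post.

def next_index(matrix, index, n):
    return tuple(sum(a * x for a, x in zip(row, index)) % n for row in matrix)


def index_cycle(matrix, n):
    """Return (mu, lam): from step mu on, the index vectors repeat with period lam.

    There are at most n**n index vectors, so the loop always terminates.
    """
    index = tuple(range(n))
    seen = {}
    k = 0
    while index not in seen:
        seen[index] = k
        index = next_index(matrix, index, n)
        k += 1
    mu = seen[index]
    return mu, k - mu


def first_return(matrix, notes):
    """Smallest k >= 1 whose phrasing equals the original one, or None if it never comes back."""
    n = len(notes)
    mu, lam = index_cycle(matrix, n)
    original = tuple(notes)
    index = tuple(range(n))
    for k in range(1, mu + lam + 1):  # every reachable state shows up in this range
        index = next_index(matrix, index, n)
        if tuple(notes[i] for i in index) == original:
            return k
    return None


n = 7
notes = [60, 65, 64, 69, 65, 71, 72]  # [48, 53, 52, 57, 53, 59, 60] + 12, as in the post
rng = random.Random(1)
matrix = [[rng.randrange(n) for _ in range(n)] for _ in range(n)]  # like rrand(0, n-1)
print(index_cycle(matrix, n), first_return(matrix, notes))
```

With the identity matrix the phrasing never changes (period 1); with the zero matrix every note collapses onto the first one and the original phrasing never comes back; with a cyclic shift it comes back after 7 steps.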

So, how does this sound?

Like this


*By the way, you should check out the life of Galois, just to crush all your stereotypes about mathematicians. 😉

**Mathematicians love these “dual” definitions.

***Apart from the identity matrix and elements of P themselves, clearly.

13:

It’s been quite a while since I wrote anything here: Python, ChucK and functional programming are keeping me quite busy. 😉

Here I want to tell you about Lindenmayer systems (or L-systems) and how to use dictionaries in SuperCollider to sonify them. So, first of all, what is an L-system? An L-system is a recursive system built from an alphabet, an axiom (a starting word in the alphabet), and a set of production rules. Starting from the axiom, one applies the production rules to every character of the word at the same time, obtaining a new word, to which the procedure can be applied again.

A classical example is the following: consider the alphabet L={A,B}, the set of rules {A -> AB, B -> A}, and axiom word W=A. This will give the following words by iteration

A

AB

ABA

ABAAB

ABAABABA

and so on.

You are probably thinking “Wait a minute: alphabets, words, production rules? Does all this have anything to do with languages?” And the answer is: “Yes! But…”, the main difference from (formal) languages being that the production rules of a formal grammar don’t need to be applied all at the same time.
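The difference between the two kinds of rewriting is easy to see on a single step. The Python sketch below (an illustration with hypothetical function names) compares the L-system step, where every symbol is rewritten at once, with one possible grammar-style step, where only the leftmost symbol that has a rule is rewritten. A formal grammar allows any single rewrite; the leftmost choice is just one option.

```python
# Rules of the algae example from the post.
RULES = {"A": "AB", "B": "A"}


def parallel_step(word, rules):
    """L-system step: every symbol is rewritten at the same time."""
    return "".join(rules.get(symbol, symbol) for symbol in word)


def leftmost_step(word, rules):
    """Grammar-style step: rewrite a single symbol, here the leftmost one that has a rule."""
    for i, symbol in enumerate(word):
        if symbol in rules:
            return word[:i] + rules[symbol] + word[i + 1:]
    return word  # nothing left to rewrite


print(parallel_step("AB", RULES))  # ABA
print(leftmost_step("AB", RULES))  # ABB
```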

Ok, all well and good, but you may ask “What is this all for?! I mean, why?!?”, and I suspect that the fact that, for instance, the lengths of the words in the above example give you the Fibonacci sequence is not an appropriate answer. The point is that Lindenmayer came to realize that these iterative systems nicely describe the growth of many types of plants and their branching structures. For instance, the example above describes the growth of algae. The truly surprising thing, also, is that L-systems can be used to generate many* well-known fractals, like the Koch curve, the Sierpinski triangle, etc., when the alphabet is appropriately geometrized. So, in a nutshell, L-systems are very cool things. 😉
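Here is a minimal Python version of the algae example, assuming the rules are stored in a plain dict (symbols without a rule are copied unchanged). It reproduces the list of words above and their lengths. The Fibonacci pattern follows from the rules: every A produces one A and one B, and every B produces one A, so the length of each word is the sum of the lengths of the two previous ones.

```python
def lsystem(axiom, rules, iterations):
    """Return the list of words obtained by rewriting all symbols in parallel."""
    words = [axiom]
    for _ in range(iterations):
        words.append("".join(rules.get(symbol, symbol) for symbol in words[-1]))
    return words


words = lsystem("A", {"A": "AB", "B": "A"}, 6)
lengths = [len(w) for w in words]
for w in words[:5]:
    print(w)
print(lengths)  # [1, 2, 3, 5, 8, 13, 21]
```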


What about dictionaries, then? Dictionaries are powerful objects in many programming languages: they are a sort of array whose elements are indexed by keys rather than by their position. Keys can be, for instance, strings or symbols**. Basically, dictionaries are perfect to work as mappings.

So, let’s look at the code

s.boot;

(

~path = "pathtofilefolder";

~kick = Buffer.read(s,~path ++ "sampleaddress");

~hhat = Buffer.read(s,~path ++ "sampleaddress");

~clap = Buffer.read(s,~path ++ "sampleaddress");

SynthDef(\playbuf,{arg out = 0, r = 1, buff = 0, amp = 1, t = 0;

var sig = PlayBuf.ar(1, buff, r);

var env = EnvGen.kr( Env.perc(0.01, 0.8), doneAction: 2);

Out.ar(out, sig*env*amp*t!2);

}).add;

SynthDef(\mall,{arg out=0,note, amp = 1;

var sig=Array.fill(3,{|n| SinOsc.ar(note.midicps*(n+1),0,0.3)}).sum;

var env=EnvGen.kr(Env.perc(0.01,0.8), doneAction:2);

Out.ar(out, sig*env*amp!2);

}).add;

)

(

var dict = IdentityDictionary[\A -> "AB", \B -> "A", \C -> "DB", \D -> "BC"]; //These are the production rules of the L-system

var word = "AC"; //Axiom word

var string_temp = "";

var iter = 10;

//These are dictionaries mapping the alphabet to "artistic" parameters: MIDI notes, beat occurrences, etc.

var dictnotes = IdentityDictionary[\A -> 50, \B -> 55, \C -> 54, \D -> 57];

var dictkick = IdentityDictionary[\A -> 1, \B -> 0, \C -> 1, \D -> 0];

var dicthat = IdentityDictionary[\A -> 1, \B -> 0, \C -> 1, \D -> 1];

var notes=[];

var beat=[];

var beat2=[];

//This iteration generates the system recursively

iter.do({

word.asArray.do({|i|

string_temp = string_temp ++ dict[i.asSymbol];

});

word = string_temp;

string_temp = "";

});

word.postln;

//Here we map the final system to the parameters as above

word.do({|i| notes = notes ++ dictnotes[i.asSymbol];});

word.do({|i| beat = beat ++ dictkick[i.asSymbol];});

word.do({|i| beat2 = beat2 ++ dicthat[i.asSymbol];});

notes.postln;

beat.postln;

s.record;

Pbind(*[\instrument: \mall, \note: Pseq(notes,inf), \amp: Pfunc({rrand(0.06,0.1)}), \dur: 1/4]).play(quant:32);

Pbind(*[\instrument: \mall, \note: Pseq(notes + 48,inf), \amp: Pfunc({rrand(0.06,0.1)}),\dur: 1/8]).play(quant:32 + 16);

Pbind(*[\instrument: \playbuf, \t: Pseq(beat,inf), \buff: ~kick, \dur: 1/8]).play(quant:32 + 32);

Pbind(*[\instrument: \playbuf, \t: Pseq(beat2,inf), \buff: ~hhat, \r:8, \dur: 1/8]).play(quant:32 + 32 + 8);

Pbind(*[\instrument: \playbuf, \t: Prand([Pseq([0,0,1,0],4), Pseq([0,1,0,0],1)],inf), \buff: ~clap, \r: 1,\dur: 1/4]).play(quant:32 + 32 + 8);

)

The code above is, in a way, a “universal L-system generator”: you can change the alphabet, the production rules and the axiom, and get your own system. A generalisation of L-systems is given by “probabilistic L-systems”, in which each production rule is weighted with a certain probability of being applied. This is maybe the topic for another post, though. 😉

While listening to this, notice how the melodies and the beats tend to self-replicate, but with “mutations”.
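Since probabilistic L-systems came up above, here is a rough Python sketch of the idea, with made-up weights for the algae alphabet (the rule table and the function names are hypothetical, not from the post). Each symbol picks one of its replacements according to the weights, using `random.Random.choices`; passing a seed makes a run reproducible, which is handy if you want to recall a particular “mutation”.

```python
import random

# Hypothetical weighted rules: each symbol maps to (replacement, weight) pairs.
STOCHASTIC_RULES = {
    "A": [("AB", 0.7), ("BA", 0.3)],
    "B": [("A", 1.0)],
}


def stochastic_step(word, rules, rng):
    """Rewrite every symbol at once, drawing each replacement according to its weight."""
    out = []
    for symbol in word:
        options = rules.get(symbol)
        if options is None:
            out.append(symbol)  # symbols without rules are copied unchanged
            continue
        replacements, weights = zip(*options)
        out.append(rng.choices(replacements, weights=weights, k=1)[0])
    return "".join(out)


def stochastic_lsystem(axiom, rules, iterations, seed=None):
    rng = random.Random(seed)
    word = axiom
    for _ in range(iterations):
        word = stochastic_step(word, rules, rng)
    return word


print(stochastic_lsystem("A", STOCHASTIC_RULES, 5, seed=42))
```

With these particular weights both replacements of A contain one A and one B, so the word lengths still follow the Fibonacci sequence; only the order of the symbols mutates.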


*I don’t know any mathematical proof of this statement.

**I have used Identity Dictionaries here, where keys are symbols.

01:

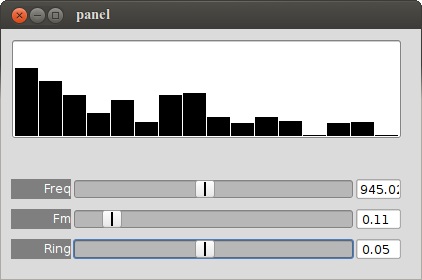

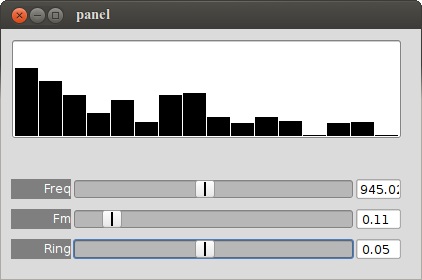

When I started learning SuperCollider, I was motivated more by algorithmic and generative music. I was (and still am) fascinated by bits of code that can go on forever, and create weird soundscapes. Because of this, I never paid attention to building graphical interfaces. Another reason why I stayed away from interfaces was that I was using Pure Data before, which is an environment in which building the interface and coding are, from the user’s perspective, essentially the same thing. While it was fun for a while, I always got frustrated when, after spending some time building a patch, I wanted to scale it up: in most cases, I ended up with the infamous spaghetti monster. Studying additive synthesis was such a situation: once you understand the concept of a partial, and how to build a patch with, say, 4 of them, extending it is only a matter of repeating the same job*. It’s almost a monkey job: enter the computer!

The code here shows exactly this paradigm: yes, you’ll have to learn a bunch of new classes, like Window, to even be able to see a slider. But after that, it’ll scale up easy peasy, in this case simply by changing the variable ~partials. So, as a sort of rule of thumb: if you think you will need to scale up your patch/code, go for a text-based programming language as opposed to a graphical environment. The learning curve is steeper, but it’ll pay off.
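To give an idea of what “scaling up via one variable” means outside of SuperCollider, here is a tiny Python sketch of plain additive synthesis (no FM or ring modulation, and the 1/k amplitudes are just an example). The number of partials is simply the length of the amplitude list, which plays the role of ~partials and of the MultiSliderView values in the SuperCollider code.

```python
import math


def additive(freq, amps, sample_rate=44100, n_samples=64):
    """Sum of sine partials at freq * (k + 1), each weighted by amps[k].

    Adding a partial only means adding one more entry to `amps`.
    """
    return [
        sum(a * math.sin(2 * math.pi * freq * (k + 1) * t / sample_rate)
            for k, a in enumerate(amps))
        for t in range(n_samples)
    ]


partials = 16                                    # plays the role of ~partials
amps = [1.0 / (k + 1) for k in range(partials)]  # e.g. a sawtooth-like 1/k spectrum
signal = additive(110.0, amps)
print(len(signal), max(abs(x) for x in signal))
```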

s.boot;

(

//Setting the various Bus controls;

~freq=Bus.control(s);

~fm=Bus.control(s);

~ring=Bus.control(s);

~partials=16; //Number of partials;

~partials.do{|n|

~vol=~vol.add(Bus.control(s));

};

//Define the main Synth;

SynthDef(\harm,{arg out=0;

var vol=[];

var sig;

~freq.set(60);

sig=Array.fill(~partials,{|n| SinOsc.ar(Lag.kr(~freq.kr,0.1)*(n+1)+(SinOsc.kr(~freq.kr,0,1).range(0,1000)*(~fm.kr)),0,1)});

~partials.do{|n|

vol=vol.add(Lag.kr(~vol[n].kr,0.05));

};

Out.ar(out,(sig*vol).sum*(1+(SinOsc.ar(10,0,10)*Lag.kr(~ring.kr,0.1)))*0.1!2);

}).add;

//Build the Graphical interface;

w = Window(bounds:Rect(400,400,420,250)); //Creates the main window

w.front;

w.onClose_({x.free});

//Add the multislider;

n=~partials+1;

m = MultiSliderView(w,Rect(10,10,n*23+0,100));

m.thumbSize_(23);

m.isFilled_(true);

m.value=Array.fill(n, {|v| 0});

m.action = { arg q;

~partials.do{|n|

~vol[n].set(q.value[n]);

};

q.value.postln;

};

//Add sliders to control frequency, fm modulation, and ring modulation;

g=EZSlider(w,Rect(10,150,390,20),"Freq",ControlSpec(20,2000,\lin,0.01,60),{|ez| ~freq.set(ez.value);ez.value.postln});

g.setColors(Color.grey,Color.white);

g=EZSlider(w,Rect(10,180,390,20),"Fm",ControlSpec(0,1,\lin,0.01,0),{|ez| ~fm.set(ez.value);ez.value.postln});

g.setColors(Color.grey,Color.white);

g=EZSlider(w,Rect(10,210,390,20),"Ring",ControlSpec(0,0.1,\lin,0.001,0),{|ez| ~ring.set(ez.value);ez.value.postln});

g.setColors(Color.grey,Color.white);

//Create the synth

x=Synth(\harm);

)

s.quit;

If everything went according to plan, you should get this window

*Yes, I know the objections: you should have used encapsulation, macros, etc., but this didn’t work for me, because I rarely know what I’m going to obtain. “An LFO here? Sure I want it! Oh, wait, I have to connect all these lines again, for ALL partials?! Damn!”

22:

It’s always good practice to take a simple idea and look at it from a different perspective. So, I went back to the ambient code of my last post, and built a little variation. If you check that code, you’ll see that the various synths are allocated by a routine on a schedule, and it would go on forever. The little variation I came up with is the following: instead of allocating synths via an iterative procedure which is independent of the synths playing, we will allocate a new synth whenever the amplitude of the playing synth exceeds a certain value. In plain English: “Yo, synth, every time your amplitude is greater than x, do me a favor darling, allocate a new synth instance. Thanks a lot!”. But how do we measure the amplitude of a synth? Is that possible? Yes, it is: the UGen Amplitude does exactly this job, being an amplitude follower.

Now we are faced with a conceptual point, which I think is quite important, and often overlooked. SuperCollider consists of two “sides”: a language side (the client), where everything related to the programming language itself takes place, and a server side, where everything concerning audio happens, and in particular where UGens live. So, when you allocate a synth, the language side sends a message to the audio server with instructions about the synth graph to create (i.e. how the various UGens interact with each other). Since Amplitude lives on the server side, we need a way to send a message from the server back to the language. Since in this case our condition, i.e. amplitude > x, is a boolean condition*, and we want to allocate a single synth every time it holds, we can use the UGen SendTrig, which sends an OSC message to the language side, which in turn triggers the allocation of a new synth via an OSCFunc. We have almost solved the problem: SendTrig sends a message every time its trigger input goes from non-positive to positive, and while the amplitude hovers around the threshold this can happen many times in a row, while we need only one trigger per synth.

For this, we can use a classic “debouncing” technique: we measure the time between a trigger and the previous one via the Timer UGen, check whether “enough” time has passed, and only then let the trigger through. The value chosen here is such that only one trigger per synth should happen. I say should, because in this case I end up getting two triggers per synth, which is not what I want… I don’t really know why this happens, but in the code you will find a workaround: simply “mark” the two triggers with a Dseq, and keep only the second one, which is what the “if” conditional in the OSC function does. Of course, this little example opens the way to all sorts of cool ideas, like controlling parts of synths or generating events via a contact microphone, etc. etc.
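The trigger logic can be prototyped on the language side too. The Python sketch below is only an analogy, not a model of how Amplitude, SendTrig or Timer are implemented: `rising_edges` reports every upward crossing of a threshold, and `debounce` keeps an edge only if more than `min_gap` seconds passed since the previous one. The amplitude values and the function names are invented for the example.

```python
def rising_edges(signal, threshold):
    """Indices where the signal goes from <= threshold to > threshold.

    Mimics a trigger input going from non-positive to positive,
    which is when SendTrig fires.
    """
    edges = []
    above = False
    for i, x in enumerate(signal):
        now_above = x > threshold
        if now_above and not above:
            edges.append(i)
        above = now_above
    return edges


def debounce(edge_times, min_gap):
    """Keep an edge only if more than min_gap seconds passed since the previous edge.

    Loosely mirrors trigCon = (Timer.kr(trig) > 4) * trig, where Timer measures
    the time between consecutive triggers; here the very first edge is kept.
    """
    kept = []
    previous = None
    for t in edge_times:
        if previous is None or t - previous > min_gap:
            kept.append(t)
        previous = t
    return kept


# An amplitude envelope wobbling around a threshold of 0.1, sampled every 0.5 s.
amplitude = [0.0, 0.05, 0.12, 0.09, 0.13, 0.08, 0.12, 0.02]
edges = rising_edges(amplitude, 0.1)
print(edges)                                    # [2, 4, 6]: three triggers for one swell
print(debounce([i * 0.5 for i in edges], 4.0))  # [1.0]: only one survives
```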

s.boot;

(

~out=Bus.audio(s,2);

//Synth definitions

SynthDef(\pad,{arg out=0,f=#[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0 ],d=0,a=0.1,amp=0.11;

var sig=0;

var sig2=SinOsc.ar(f[15],0,0.08);

var env=EnvGen.kr(Env.linen(6,7,10),doneAction:2);

var amplitude;

var trig;

var trigCon;

var timer;

sig=Array.fill(16,{|n| [SinOsc.ar(f[n]+SinOsc.ar(f[n]*10,0,d),0,a),VarSaw.ar(f[n]+SinOsc.ar(f[n]*10,0,d),0,0.5,a),LFTri.ar(f[n]+SinOsc.ar(f[n]*10,0,d),0,a),WhiteNoise.ar(0.001)].wchoose([0.33,0.33,0.33,0.01])});

sig=Splay.ar(sig,0.5);

amplitude=Amplitude.kr((sig+sig2)*env);

trig=amplitude > amp;

timer=Timer.kr(trig);

trigCon=(timer>4)*trig;

SendTrig.kr(trigCon,0,Demand.kr(trigCon,0,Dseq([0,1])));

Out.ar(out,((sig+sig2)*env)!2);

}).add;

SynthDef(\out,{arg out=0,f=0;

var in=In.ar(~out,2);

in=RLPF.ar(in,(8000+f)*LFTri.kr(0.1).range(0.5,1),0.5);

in=RLPF.ar(in,(4000+f)*LFTri.kr(0.2).range(0.5,1),0.5);

in=RLPF.ar(in,(10000+f)*LFTri.kr(0.24).range(0.5,1),0.5);

in=in*0.5 + CombC.ar(in*0.5,4,1,14);

in=in*0.99 + (in*SinOsc.ar(10,0,1)*0.01);

Out.ar(out,Limiter.ar(in,0.9));

}).add;

//Choose octaves;

a=[72,76,79,83];

b=a-12;

c=b-12;

d=c-12;

//Define the function that will listen to OSC messages, and allocate synths

o = OSCFunc({ arg msg, time;

[time, msg].postln;

if(msg[3] == 1,{Synth(\pad,[\f:([a.choose,b.choose] ++ Array.fill(5,{b.choose}) ++ Array.fill(5,{c.choose}) ++ Array.fill(4,{d.choose})).midicps,\a:0.1/rrand(2,4),\d:rrand(0,40),\amp:rrand(0.10,0.115),\out:~out]);});

},'/tr', s.addr);

)

//Set the main out

y=Synth(\out);

Synth(\pad,[\f:([a.choose,b.choose] ++ Array.fill(5,{b.choose}) ++ Array.fill(5,{c.choose}) ++ Array.fill(4,{d.choose})).midicps,\a:0.1/rrand(2,4),\d:rrand(0,40),\amp:rrand(0.10,0.115),\out:~out]);

o.free;

y.free;

s.quit;

Here’s how it sounds. Notice that the audio ended on its own: there was no interaction on my side.


*Technically speaking, it’s not a boolean condition, since there are no boolean entities on the server side. Indeed, amplitude > amp is a BinaryOpUGen… For this kind of thing, the SuperCollider Book is your dearest friend.

09:

It’s been a while since my last post, but I’ve been busy with the release of an EP. And work. And other things. 😉

Here’s some code for all ambient soundscape lovers, which shows the power of text-based programming languages. The code is not the cleanest, but I hope you get the idea, i.e. tons of oscillators, and filters!

What I like here is that the long drones differ in texture, due to the fact that the single oscillators are randomly chosen.

s.boot;

~out=Bus.audio(s,2);

//Synth definitions

SynthDef(\pad,{arg out=0,f=#[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0 ],d=0,a=0.1;

var sig=0;

var sig2=SinOsc.ar(f[15],0,0.08);

var env=EnvGen.kr(Env.linen(6,7,10),doneAction:2);

sig=Array.fill(16,{|n| [SinOsc.ar(f[n]+SinOsc.ar(f[n]*10,0,d),0,a),VarSaw.ar(f[n]+SinOsc.ar(f[n]*10,0,d),0,0.5,a),LFTri.ar(f[n]+SinOsc.ar(f[n]*10,0,d),0,a),WhiteNoise.ar(0.001)].wchoose([0.33,0.33,0.33,0.01])});

sig=Splay.ar(sig,0.5);

Out.ar(out,((sig+sig2)*env)!2);

}).add;

SynthDef(\out,{arg out=0,f=0;

var in=In.ar(~out,2);

in=RLPF.ar(in,(8000+f)*LFTri.kr(0.1).range(0.5,1),0.5);

in=RLPF.ar(in,(4000+f)*LFTri.kr(0.2).range(0.5,1),0.5);

in=RLPF.ar(in,(10000+f)*LFTri.kr(0.24).range(0.5,1),0.5);

in=in*0.5 + CombC.ar(in*0.5,4,1,14);

in=in*0.99 + (in*SinOsc.ar(10,0,1)*0.01);

Out.ar(out,Limiter.ar(in,0.9));

}).add;

//Choose octaves;

a=[72,76,79,83];

b=a-12;

c=b-12;

d=c-12;

//Set the main out

y=Synth(\out);

//Main progression

t=fork{

inf.do({

y.set(\f,rrand(-1000,1000));

Synth(\pad,[\f:([a.choose,b.choose] ++ Array.fill(5,{b.choose}) ++ Array.fill(5,{c.choose}) ++ Array.fill(4,{d.choose})).midicps,\a:0.1/rrand(2,4),\d:rrand(0,40),\out:~out]);

rrand(5,10).wait;})

};

t.stop;

s.quit;

Of course, you could easily generalize this to a larger number of oscillators, filters, etc.

And even more, consider this just as a base for further sound manipulation, e.g. stretch it, granulize it, etc.

Experiment. Have fun. Enjoy.
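For reference, here is how the pitch material and the oscillator mix of the pad could be generated in Python (a sketch; the names are mine and the oscillator names are just labels). One thing worth knowing about the SuperCollider version: the `wchoose` inside `Array.fill` runs on the language side while the SynthDef is being built, so the oscillator mix is fixed each time the SynthDef is added, while the pitches and the `a` and `d` arguments change for every new Synth.

```python
import random


def midicps(note):
    """MIDI note to Hz, equal temperament with A4 = MIDI 69 = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


A = [72, 76, 79, 83]      # a = [72,76,79,83] in the post
B = [m - 12 for m in A]   # b = a - 12
C = [m - 24 for m in A]   # c = b - 12
D = [m - 36 for m in A]   # d = c - 12


def pad_voicing(rng):
    """16 notes laid out like the f argument: 1 from A, 6 from B, 5 from C, 4 from D."""
    notes = [rng.choice(A), rng.choice(B)]
    notes += [rng.choice(B) for _ in range(5)]
    notes += [rng.choice(C) for _ in range(5)]
    notes += [rng.choice(D) for _ in range(4)]
    return notes


def pick_oscillators(rng, voices=16):
    """One weighted choice per voice, like [...].wchoose([0.33, 0.33, 0.33, 0.01])."""
    kinds = ["SinOsc", "VarSaw", "LFTri", "WhiteNoise"]
    return rng.choices(kinds, weights=[0.33, 0.33, 0.33, 0.01], k=voices)


rng = random.Random(0)
notes = pad_voicing(rng)
print(notes)
print([round(midicps(n), 2) for n in notes])
print(pick_oscillators(rng))
```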


23:

It has been a while, but I have been busy with a new work to be released in July, and recently I have spent some time fiddling with Reaktor, which I have to say is good fun.

So, here’s something very simple and a classic in generative music, i.e. a wind chimes generator. I have decided to use a single synth graph in SuperCollider (everything happens on the “server side”), using a random impulse generator (Dust, a sort of Geiger counter) with varying density as the master clock, and a Demand UGen as pitch selector. There is also a bit of FM synthesis, which is a nice way to get “tubular” sounds in this case. Finally, some delay and reverb.

s.boot;

{

var pitch,env,density,scale,root,sig,mod,trig,pan,volenv;

volenv=EnvGen.kr(Env.linen(10,80,20),1,doneAction:2);

density=LFTri.kr(0.01).range(0.01,2.5);

trig=Dust.kr(density);

root=Demand.kr(trig,0,Drand([72,60,48,36],inf));

pan=TRand.kr(-0.4,0.4,trig);

scale=root+[0,2,4,5,7,9,11,12];

pitch=Demand.kr(trig,0,Drand(scale,inf));

env=EnvGen.kr(Env.perc(0.001,0.3),trig);

mod=SinOsc.ar(pitch.midicps*4,0,800)*env;

sig=SinOsc.ar(pitch.midicps+mod,0,0.2);

FreeVerb.ar(CombL.ar(Pan2.ar(sig*env,pan),5,0.2,5))*volenv;

}.play;

s.quit;
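Dust produces random impulses with a given average density, which behaves roughly like a Poisson process. The Python sketch below (an illustration, assuming a constant density instead of the LFTri-modulated one, and with hypothetical function names) generates event times with exponentially distributed gaps and picks root, pitch and pan for each event in the same spirit as the Drand and TRand UGens in the code above.

```python
import random


def dust_times(density_hz, duration_s, rng):
    """Event times of a Poisson process with the given mean density (events per second)."""
    times = []
    t = rng.expovariate(density_hz)
    while t < duration_s:
        times.append(t)
        t += rng.expovariate(density_hz)
    return times


ROOTS = [72, 60, 48, 36]            # Drand([72,60,48,36], inf)
SCALE = [0, 2, 4, 5, 7, 9, 11, 12]  # major scale degrees added to the root


def chime_events(duration_s, density_hz, seed=0):
    """List of (time, midi_pitch, pan) tuples, one per random trigger."""
    rng = random.Random(seed)
    events = []
    for t in dust_times(density_hz, duration_s, rng):
        root = rng.choice(ROOTS)
        pitch = root + rng.choice(SCALE)
        pan = rng.uniform(-0.4, 0.4)  # like TRand.kr(-0.4, 0.4, trig)
        events.append((t, pitch, pan))
    return events


for event in chime_events(10.0, 1.5, seed=3)[:5]:
    print(event)
```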

Here’s how it sounds


05:

I am not usually excited about the sketches I make in Processing: I mean, I do like them, but I always think they could have come out better, somehow.

This one, instead, I really like, and I’m quite happy about. It is the outcome of playing with an amazing particle systems library for Processing, called toxiclibs.

The whole idea is particularly simple: take a huge number of points, and connect them with springs with a short rest length, so as to form a circular shape. Moreover, add an attraction towards the center, and start the animation with the particles far from the center. They’ll start bouncing under the elastic forces and the attraction to the center, until the circular shape becomes relatively small. The interesting choice here, from the visual perspective, was not to “represent” (or draw) the particles, but rather the springs themselves. One of the important features of the animation, in this case, is the ability to slowly clean the screen of what has been drawn on it, otherwise you’ll end up with a huge white blob (not that pleasant, is it?). This is accomplished by the function fadescr, which goes pixel by pixel and slowly moves each colour channel towards the value 0, basically fading the screen to black: all the >> and << bit shifts (together with the & masks) are there to speed things up, which is crucial in these cases.

Why not use the celebrated "rectangle over the screen with low alpha"? Because it produces "ghosts", i.e. graphic artifacts. See here for a nice description.
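The two fading strategies can be compared on a single colour channel. `fadescr_step` is the exact integer update used in fadescr (Python’s `>>` on negative integers is an arithmetic shift, like Java’s), so each call lowers a non-zero channel by exactly 1 and the screen eventually reaches true black. `alpha_blend_step` is a hypothetical model of drawing a black rectangle with alpha 10, assuming the blend is rounded to the nearest integer; real renderers may use different arithmetic, but under this assumption the channel gets stuck at a dim grey, which is one common explanation of the ghosts.

```python
def fadescr_step(c, target=0):
    """Same integer step as fadescr(): c + ((target - c) >> 8)."""
    return c + ((target - c) >> 8)


def alpha_blend_step(c, alpha=10, target=0):
    """Hypothetical rounded blend of a target-coloured rect with the given alpha over c."""
    return round(c + (target - c) * alpha / 255)


def steps_to_settle(step, c=255, max_steps=10_000):
    """Apply step until the value stops changing; return (final_value, steps)."""
    for n in range(max_steps):
        nxt = step(c)
        if nxt == c:
            return c, n
        c = nxt
    return c, max_steps


print(steps_to_settle(fadescr_step))          # (0, 255): one unit per call, down to black
print(steps_to_settle(alpha_blend_step)[0])   # 12: stuck above black
```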

I really like the type of textures the animation develops, it gives me the idea of smooth silk, smoke or… flares. 😉

import toxi.physics2d.*;

import toxi.physics2d.behaviors.*;

import toxi.geom.*;

VerletPhysics2D physics;

int N=2000;

VerletParticle2D[] particles=new VerletParticle2D[N];

VerletParticle2D center;

float r=200;

void setup(){

size(600,600);

background(0);

frameRate(30);

physics=new VerletPhysics2D();

center=new VerletParticle2D(new Vec2D(width/2,height/2));

center.lock();

AttractionBehavior behavior = new AttractionBehavior(center, 800, 0.01);

physics.addBehavior(behavior);

beginShape();

noFill();

stroke(255,5);

for (int i=0;i<particles.length;i++){

particles[i]=new VerletParticle2D(new Vec2D(width/2+(r+random(10,30))*cos(radians(i*360/N)),height/2+(r+random(10,30))*sin(radians(i*360/N))));

vertex(particles[i].x,particles[i].y);

}

endShape();

for (int i=0;i<particles.length;i++){

physics.addParticle(particles[i]);

};

for (int i=1;i<particles.length;i++){

VerletSpring2D spring=new VerletSpring2D(particles[i],particles[i-1],10,0.01);

physics.addSpring(spring);

};

VerletSpring2D spring=new VerletSpring2D(particles[0],particles[N-1],20,0.01);

physics.addSpring(spring);

};

void draw(){

physics.update();

beginShape();

noFill();

stroke(255,10);

for (int i=0;i<particles.length;i++){

vertex(particles[i].x,particles[i].y);

}

endShape();

if (frameCount % 3 == 0)

{

fadescr(0,0,0);

};

};

void fadescr(int r, int g, int b) {

int red, green, blue;

loadPixels();

for (int i = 0; i < pixels.length; i++) {

red = (pixels[i] >> 16) & 0x000000ff;

green = (pixels[i] >> 8) & 0x000000ff;

blue = pixels[i] & 0x000000ff;

pixels[i] = (((red+((r-red)>>8)) << 16) | ((green+((g-green)>>8)) << 8) | (blue+((b-blue)>>8)));

}

updatePixels();

}

Here’s a render of the animation

The audio has been generated in SuperCollider, using the following code

s.boot;

~b=Bus.audio(s,2);

File.openDialog("",{|path|

~path=path;

~buff=Buffer.read(s,path);

})

20.do({

~x=~x.add(Buffer.read(s,~path,rrand(0,~buff.numFrames)));

})

(

SynthDef(\playbuff,{arg out=0,in=0,r=1,p=0;

var sig=PlayBuf.ar(1,in,r,loop:1);

var env=EnvGen.kr(Env([0,1,1,0],[0.01,Rand(0.1,1),0.01]),gate:1,doneAction:2);

Out.ar(out,Pan2.ar(sig*env*3,pos:p));

}).add;

SynthDef(\synth,{arg out=0;

var sig=VarSaw.ar(90,0,0.5*LFTri.kr(0.1).range(1,1.2),mul:0.1)+VarSaw.ar(90.5,0,0.5*LFTri.kr(0.2).range(1,1.2),mul:0.1)+VarSaw.ar(90.8,0,0.5*LFTri.kr(0.3).range(1,1.2),mul:0.1);

sig=HPF.ar(sig,200);

sig=FreeVerb.ar(sig);

Out.ar(out,sig!2)

}).add;

SynthDef(\pad,{arg out=0,f=0;

var sig=Array.fill(3,{|n| SinOsc.ar(f*(n+1),0,0.05/(n+1))}).sum;

var env=EnvGen.kr(Env([0,1,1,0],[10,5,10]),gate:1,doneAction:2);

sig=LPF.ar(sig,3000);

Out.ar(~b,sig*env!2)

}).add;

SynthDef(\del,{arg out=0;

var sig=CombC.ar(In.ar(~b,2),0.4,TRand.kr(0.05,0.3,Impulse.kr(0.1)),7);

Out.ar(out,sig);

}).add;

)

y=Synth(\del);

x=Synth(\synth);

t=fork{

inf.do{

Synth(\pad,[\f:[48,52,53,55,57].choose.midicps]);

23.wait;}

};

q=fork{

inf.do{

//Pick one of the 20 buffers in ~x at random

Synth(\playbuff,[\in:~x.choose,\r:[-1,1].choose,\p:rrand(-0.5,0.5),\out:[0,~b].wchoose([0.92,0.08])]);

rrand(0.5,3).wait}

}

t.stop;

q.stop;

x.free;

y.free;

s.quit;

28:

In this post I won’t show you any code; instead, I want to talk about coding, in particular about “live coding”.

All the various codes you have seen on this blog share a common feature: they are “static”.

I’ll try to explain it better.

When you write code in a programming language, you may have in mind the following situation: you write down some instructions for the machine to execute, you tell the compiler to compile, and wait for the “result”. By result here I don’t mean simply a numerical (or any other) output which is “time independent”: I mean, more generally (and very vaguely), the process the machine was instructed to perform. For instance, an animation in Processing or a musical composition in SuperCollider is not static by any means, since it requires the existence of time itself to make sense. Nevertheless, the code itself, once the process is happening (at “runtime”), is static: it is immutable, it cannot be modified. Imagine the compiler as a conductor, who instructs the musicians with a score written by a composer: the composer cannot intervene in the middle of the performance, and change the next four measures for the violin and the trombone. Or could he?

Equivalently, could it be possible to change part of a program while it is running? This would have a great impact on the various artistic situations where coding is used, because it would allow us to “improvise” the rules of the game, the instructions the machine was told to blindly follow.

The answer is yeah, it is possible. And more interestingly, people do it.

According to the Holy Grail of Knowledge

”Live coding (sometimes referred to as ‘on-the-fly programming’, ‘just in time programming’) is a programming practice centred upon the use of improvised interactive programming. Live coding is often used to create sound and image based digital media, and is particularly prevalent in computer music, combining algorithmic composition with improvisation.”

So, why do live coding? Well, if you are into electronic music, maybe of the dancey type, you can very soon get a “press play” feeling, and maybe look for possibilities to improvise (if you are into improvising, anyway).

It may build that performing tension experienced by live musicians, for instance, which can produce nice creative effects. This doesn’t mean that everything which is live coded is going to be great, in the same way as not every live concert is going to be a great experience. I’m not a live coding expert, but I can assure you it is quite fun, and gives a stronger performing feeling than just triggering clips with a MIDI controller.

If you are curious about live coding in SuperCollider, words like ProxySpace, Ndef, Pdef, Tdef, etc. will come in handy. Also, the chapter on Just In Time Programming from the SuperCollider Book is a must.
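The core trick behind these names is a placeholder (a proxy, such as an Ndef or a Pdef) whose source can be replaced while it is playing. As a toy analogy only (this is not how JITLib is implemented, and the class and function names are hypothetical), here is the same idea in Python: a player keeps asking a proxy for values, and the proxy’s source is swapped in the middle of the run, without restarting anything.

```python
class Proxy:
    """A placeholder: the player asks it for values, its source can be swapped at any time."""

    def __init__(self, source):
        self.source = source

    def __call__(self, step):
        return self.source(step)


def player(proxy):
    """Endless generator, a bit like a Routine: it looks up the current source on every step."""
    step = 0
    while True:
        yield proxy(step)
        step += 1


melody = Proxy(lambda step: [60, 62, 64, 65][step % 4])
p = player(melody)
first = [next(p) for _ in range(4)]

# The "live" edit: replace the source while p is still running.
melody.source = lambda step: [67, 65, 64, 62][step % 4]
second = [next(p) for _ in range(4)]
print(first, second)  # [60, 62, 64, 65] [67, 65, 64, 62]
```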

I realize I could go on blabbering for a long time about this, but I’ll instead do something useful, and list some links, which should give you an idea on what’s happening in this field.

I guess you can’t rightly talk about live coding without mentioning TOPLAP.

A nice guest post by Alex McLean about live coding and music.

Here’s Andrew Sorensen live coding a Disklavier in Impromptu.

Benoit and The Mandelbrots, a live coding band.

Algorave: if the name suggests algorithmic dance music to you, you are correct! Watch Norah Lorway perform in London.

The list could go on, and on, and on…

18:

This code came out by playing with particle systems in Processing.

I have talked about particle systems in a previous post, in the context of generating a still image. In this particle system there is an important conceptual difference, though: the number of particles is not conserved. Each particle has a life span, after which it is removed from the system: this is done by using an ArrayList rather than a simple array. In the rendered video below, you will clearly see particles start to disappear. The disappearing of particles could in principle have been obtained by simply reducing the opacity of a given particle gradually to 0, rather than removing it: in other words, the particle would still be there, only invisible. In the particle system I have used, the two approaches make no visual difference, apart from the saving in computational resources (which is still a big deal!), since the particles do not interact with each other. If inter-particle interactions were present, the situation would be completely different: an invisible particle would still interact with the others, hence it would not be really “dead”.
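Here is a small Python sketch of the bookkeeping involved (the class mirrors the lifespan logic of the Processing class Part below, but the names and numbers are illustrative). Dead particles are removed from the list while iterating backwards, so that removing index i never shifts the elements that still have to be visited; since these particles do not interact, removing them is equivalent to hiding them.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    lifespan: float  # frames left, like lifesp in the Processing class Part

    def step(self):
        self.lifespan -= 1

    def is_dead(self):
        return self.lifespan < 0


def update(particles):
    """Advance every particle one frame and drop the dead ones in place.

    Iterating backwards means that removing index i never shifts the
    elements we still have to visit, the same trick used in draw().
    """
    for i in range(len(particles) - 1, -1, -1):
        particles[i].step()
        if particles[i].is_dead():
            particles.pop(i)


rng = random.Random(0)
particles = [Particle(rng.uniform(0, 500), rng.uniform(0, 500), rng.uniform(255, 2000))
             for _ in range(200)]
counts = []
for frame in range(2100):
    update(particles)
    counts.append(len(particles))
print(counts[0], counts[1000], counts[-1])
```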

Believe it or not, accepting the fact that the number of particles in a system might not be conserved required, in the first decades of the 20th century, a huge paradigm shift in the way physical systems, in particular at the quantum scale, are described, giving a good reason (among others) to develop a new physical framework, called Quantum Field Theory.

import processing.opengl.*;

int N=500*30;

ArrayList<Part> particles= new ArrayList<Part>();

float t=0;

float p=1;

float centx,centy,centz;

float s=0.01;

float alpha=0;

float st=0;

void setup(){

size(500,500,OPENGL);

background(0);

directionalLight(126, 126, 126, 0, 0, -1);

ambientLight(102, 102, 102);

centx=width/2;

centy=height/2;

centz=0;

for (int i=0;i<N;i++){

float r=random(0,500);

float omega=random(0,360);

particles.add(new Part(new PVector(width/2+r*sin(radians(omega)),height/2+r*cos(radians(omega)),0)));

};

for (int i=0;i<particles.size();i++){

Part p=particles.get(i);

p.display();

};

};

void draw(){

if (random(0.0,1.0)<0.01) {

t=0;

st=random(0.002,0.003);

p=random(1.0,1.3);

};

s+=(st-s)*0.01;

background(0);

directionalLight(255, 255, 255, 0, 4, -10);

ambientLight(255, 255, 255);

centx=width/2+400*abs(sin(alpha/200))*sin(radians(alpha));

centz=0;

centy=height/2+400*abs(sin(alpha/200))*cos(radians(alpha));

for (int i=particles.size()-1;i>=0;i--){

Part p=particles.get(i);

p.run();

if (p.isDead()) particles.remove(i);

};

t+=0.01;

alpha+=5;

};

//Define the class Part

class Part{

PVector loc;

PVector vel;

float l;

float lifesp;

Part(PVector _loc){

loc=_loc.get();

vel=new PVector(random(-0.5,0.5),random(-0.5,0.5),0.1*random(-0.1,0.1));

l =random(2.0,5.0);

lifesp=random(255,2000);

};

void applyForce(float t){

PVector f= new PVector(random(-0.1,0.1)*sin(noise(loc.x,loc.y,t/2)),random(-0.1,0.1)*cos(noise(loc.x,loc.y,t/2)),0.01*sin(t/10));

f.mult(0.1*p);

vel.add(f);

PVector rad=PVector.sub(new PVector(centx,centy,centz-50),loc);

rad.mult(s);

rad.mult(0.12*(l/2));

vel.add(rad);

vel.limit(7);

};

void move(){

loc.add(vel);

lifesp-=1;

};

void display(){

noStroke();

fill(255,random(130,220));

pushMatrix();

translate(loc.x,loc.y,loc.z);

ellipse(0,0,l,l);

popMatrix();

};

void run(){

applyForce(t);

move();

display();

};

boolean isDead(){

if (lifesp<0.0){

return true;

} else {

return false;

}

}

};

Here you find a render of the video (which took me quite some time…), which has been aurally decorated with a spacey drone, because… well, I like drones. 😉
